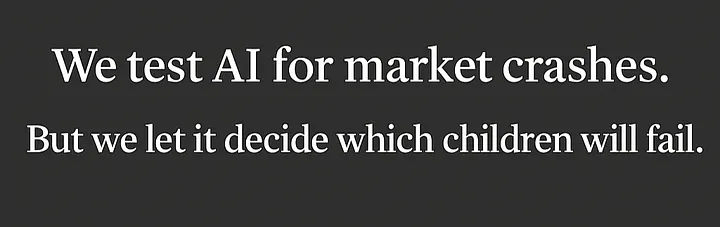

How a 12-year-old can be algorithmically labeled a “likely failure” while Wall Street gets bulletproof AI safeguards

The Algorithm Decides

Somewhere in America today, a machine learning model just flagged Marcus as a dropout risk. He’s twelve years old. The algorithm considered his zip code, his free lunch status, his father’s incarceration record, and the fact that he scored poorly on a standardized test while his grandmother was dying. The system assigned him a probability: 73% likely to drop out.

Marcus doesn’t know this. His teachers might not either. But the prediction is now in his permanent record, shaping how counselors allocate resources, how teachers unconsciously adjust their expectations, and how the school district plans its budget around him.

Meanwhile, on Wall Street, teams of PhD mathematicians are stress-testing trading algorithms through months of adversarial attacks, regulatory reviews, and sandbox simulations. They're terrified that an unchecked algorithm might destabilize a market for even a few minutes.

We have our priorities exactly backwards.

The Financial AI Fortress

When a bank like JPMorgan Chase deploys AI for trading, the system undergoes rigorous testing that would make a NASA engineer jealous. Regulators demand model documentation and independent validation. Risk management teams run thousands of simulations. There are kill switches, human oversight protocols, and pre-trade risk limits.

Why? Because we learned what happens when algorithms run amok in financial markets. The 2010 Flash Crash temporarily erased nearly a trillion dollars in market value within minutes, as trading algorithms fed a feedback loop of selling. Now we have circuit breakers, position limits, and armies of compliance officers whose job is to make sure AI doesn't break capitalism.

The irony is exquisite: We protect the wealth of investors with elaborate AI safeguards, but we let school districts deploy predictive models on children with all the regulatory oversight of a lemonade stand.

The Educational AI Wild West

In schools across America, “dropout prediction” models are making life-altering determinations about children based on data points that would make a mortgage lender blush. These systems consider:

- Demographic data: Race, ethnicity, and socioeconomic status

- Geographic markers: Home zip code and neighborhood crime statistics

- Family history: Parent education levels, previous siblings’ academic performance

- Behavioral proxies: Disciplinary records, attendance patterns, even library book checkout frequency

The stated goal is noble: identify at-risk students early so schools can intervene. But here’s what actually happens:

The algorithm becomes the intervention.

Teachers, consciously or not, begin treating “high-risk” students differently. Resources get shifted away from kids the system has written off. Self-fulfilling prophecies bloom like digital weeds. Children internalize the label when they inevitably discover it, because algorithmic predictions have a way of leaking into the very fabric of institutional culture.

The Absurdity of Our Standards

Let’s examine the regulatory environment for these two AI applications:

Financial AI:

- Mandatory stress testing and backtesting

- Regular audits by federal regulators

- Required explainability and transparency reports

- Human oversight and kill-switch protocols

- Legal liability and enforcement penalties for algorithmic failures

- Industry-wide standards and best practices

Educational AI:

- No federal oversight or standards

- No requirement for algorithmic auditing

- No mandate for explainable AI

- No standardized accuracy metrics

- No liability framework for false predictions

- No industry-wide ethical guidelines

The message is clear: We will move heaven and earth to protect your portfolio, but we’ll let an algorithm decide your child’s future with less oversight than a credit card approval.

The Human Cost of Algorithmic Recklessness

Sarah was flagged as a dropout risk in seventh grade. The algorithm noted her single mother, her free lunch status, her zip code. It missed her voracious reading habit, her mathematical intuition, her dream of becoming an engineer.

Her guidance counselor, influenced by the prediction, steered her toward “practical” vocational classes instead of advanced mathematics. Sarah internalized the message: She wasn’t college material. She fulfilled the algorithm’s prophecy, dropping out at seventeen.

She’s now thirty-four, working two minimum-wage jobs, wondering what might have been different if someone had believed in her instead of a statistical model.

Sarah's story is not hypothetical, and versions of it play out in schools across the country.

The Regulatory Double Standard

The financial industry fought hard against AI regulation, arguing it would stifle innovation and competitiveness. But when algorithmic trading triggered the Flash Crash, regulators stepped in with strict oversight. Now the financial sector grudgingly admits these safeguards make the system more stable and trustworthy.

Education, meanwhile, remains the Wild West of AI deployment. EdTech companies promise miraculous insights while deploying algorithms with all the scientific rigor of astrology. School districts, desperate for solutions and starved of resources, implement these systems without understanding their limitations or biases.

We are beta-testing algorithmic judgment on the most vulnerable people in our society: children who cannot consent, cannot opt out, and cannot fight back when the system gets it wrong.

What Oversight Actually Looks Like

Imagine if we applied financial-grade AI standards to educational algorithms:

- Algorithmic audits: Independent testing for racial, economic, and geographic bias

- Explainability requirements: Schools must be able to explain exactly why a student was flagged

- Human review protocols: No automated decision-making without meaningful human oversight

- Accuracy standards: Regular validation against real-world outcomes

- Student data rights: Children and parents can access, challenge, and correct algorithmic assessments

- Liability frameworks: Companies and schools are held accountable for algorithmic harm

These aren’t radical proposals. They’re basic safeguards we already require for algorithms that affect adults’ financial lives.

The Path Forward

We don’t need to ban AI in education. We need to regulate it like we actually care about children’s futures.

Step 1: Federal oversight. The Department of Education should establish an AI safety office with the authority to set standards and investigate harmful deployments.

Step 2: Transparency mandates. Schools using predictive algorithms must disclose their use, methodology, and accuracy rates to parents and communities.

Step 3: Bias testing. Educational AI systems should undergo the same rigorous bias testing we require for financial algorithms.

Step 4: Student data rights. Children and families deserve the same algorithmic due process rights we extend to loan applicants.

Step 5: Corporate accountability. EdTech companies should face meaningful liability for algorithmic harm, just like financial firms do.

The Choice We Face

Every day we delay action, more children are being sorted into algorithmic castes. More stories like Marcus's and Sarah's are being written by code instead of compassion.

We’ve proven we can regulate AI when we care about the outcomes. We’ve built elaborate safeguards to protect market stability. We’ve created oversight mechanisms to prevent financial systemic risk.

Now we need to decide: Are our children’s futures worth the same protection as our stock portfolios?

The choice is binary. We can continue treating kids as beta testers for unvetted algorithms, or we can extend them the same AI safety standards we demand for adults' money.

One protects wealth. The other protects human potential.

The algorithm is watching. The question is: Are we?

© 2025 Deusdedit Ruhangariyo

Founder, Conscience for AGI

Author, URRP Moral Atlas Vol. 1–6

ORCID: 0000-0005-3581-2994